```python
%%capture
%pip install -U mlflow openai
dbutils.library.restartPython()
```

```python
%%capture
%pip install python-dotenv rich
```

```python
from dotenv import load_dotenv

# Load environment variables from a .env file
load_dotenv()
```

```python
import mlflow

# Trace every OpenAI client call in MLflow
mlflow.openai.autolog()
```

```python
import base64
import os
from typing import List, Optional

from IPython.display import Image, display
from openai import OpenAI, pydantic_function_tool
from pydantic import BaseModel
from rich import print

# Pydantic models describing each supported document type (local `documents` module)
from documents import PassportBasic, PassportFull, i797, Other

DATABRICKS_TOKEN = os.environ.get("DATABRICKS_TOKEN")
DATABRICKS_BASE_URL = os.environ.get("DATABRICKS_BASE_URL")
DATABRICKS_MODEL_NAME = os.environ.get("DATABRICKS_MODEL_NAME")

client = OpenAI(
    api_key=DATABRICKS_TOKEN,  # your personal access token
    base_url=DATABRICKS_BASE_URL,
)


# Encode an image file as a Base64 string
def encode_image_to_base64(image_path):
    with open(image_path, "rb") as image_file:
        return base64.b64encode(image_file.read()).decode("utf-8")


def extract_and_show_document(document, tools):
    base64_document = encode_image_to_base64(document)
    completion_args = {
        "messages": [
            {
                "role": "user",
                "content": [
                    {
                        "type": "text",
                        "text": "Given the provided document, pick which extractor tool to call "
                        "and extract the relevant fields strictly "
                        "as JSON arguments. The 'other' tool is provided as a fallback if none of the others are a good fit",
                    },
                    {
                        "type": "image_url",
                        "image_url": {
                            # Every file is sent labeled as JPEG, whatever its real format
                            "url": f"data:image/jpeg;base64,{base64_document}",
                        },
                    },
                ],
            }
        ],
        "model": DATABRICKS_MODEL_NAME,
        "tools": tools,
    }
    chat_completion = client.beta.chat.completions.parse(**completion_args)
    display(Image(document))
    return chat_completion
```

```python
def get_tools(models: List[type[BaseModel]]):
    # Turn each Pydantic model class into a strict function-tool definition
    return [pydantic_function_tool(model) for model in models]


tools = get_tools([PassportBasic, PassportFull, i797, Other])
print(tools)
```

[ { 'type': 'function', 'function': { 'name': 'PassportBasic', 'strict': True, 'parameters': { 'properties': { 'passport_type': {'title': 'Passport Type', 'type': 'string'}, 'name': {'title': 'Name', 'type': 'string'}, 'number': {'title': 'Number', 'type': 'string'} }, 'required': ['passport_type', 'name', 'number'], 'title': 'PassportBasic', 'type': 'object', 'additionalProperties': False } } }, { 'type': 'function', 'function': { 'name': 'PassportFull', 'strict': True, 'parameters': { 'properties': { 'passport_type': {'title': 'Passport Type', 'type': 'string'}, 'name': {'title': 'Name', 'type': 'string'}, 'number': {'title': 'Number', 'type': 'string'}, 'country_code': {'title': 'Country Code', 'type': 'string'}, 'nationality': {'title': 'Nationality', 'type': 'string'}, 'date_of_birth': {'title': 'Date Of Birth', 'type': 'string'}, 'sex': {'title': 'Sex', 'type': 'string'}, 'place_of_birth': {'title': 'Place Of Birth', 'type': 'string'}, 'date_of_issue': {'title': 'Date Of Issue', 'type': 'string'}, 'date_of_expiration': {'title': 'Date Of Expiration', 'type': 'string'}, 'issuing_authority': {'title': 'Issuing Authority', 'type': 'string'} }, 'required': [ 'passport_type', 'name', 'number', 'country_code', 'nationality', 'date_of_birth', 'sex', 'place_of_birth', 'date_of_issue', 'date_of_expiration', 'issuing_authority' ], 'title': 'PassportFull', 'type': 'object', 'additionalProperties': False } } }, { 'type': 'function', 'function': { 'name': 'i797', 'strict': True, 'parameters': { 'properties': { 'receipt_number': {'title': 'Receipt Number', 'type': 'string'}, 'case_type': {'title': 'Case Type', 'type': 'string'}, 'receipt_date': {'format': 'date', 'title': 'Receipt Date', 'type': 'string'}, 'priority_date': {'format': 'date', 'title': 'Priority Date', 'type': 'string'}, 'notice_date': {'format': 'date', 'title': 'Notice Date', 'type': 'string'}, 'page': {'title': 'Page', 'type': 'string'}, 'petitioner': {'title': 'Petitioner', 'type': 'string'}, 
'beneficiary': {'title': 'Beneficiary', 'type': 'string'}, 'notice_type': {'title': 'Notice Type', 'type': 'string'}, 'section': {'title': 'Section', 'type': 'string'} }, 'required': [ 'receipt_number', 'case_type', 'receipt_date', 'priority_date', 'notice_date', 'page', 'petitioner', 'beneficiary', 'notice_type', 'section' ], 'title': 'i797', 'type': 'object', 'additionalProperties': False } } }, { 'type': 'function', 'function': { 'name': 'Other', 'strict': True, 'parameters': { 'properties': {'description': {'title': 'Description', 'type': 'string'}}, 'required': ['description'], 'title': 'Other', 'type': 'object', 'additionalProperties': False } } } ]
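Each entry printed by `pydantic_function_tool` has the same shape: `strict: True`, every field listed under `required`, and `additionalProperties: False`. To make that shape explicit, here is a minimal stdlib-only sketch that builds an equivalent definition from a dataclass. The helper `dataclass_to_strict_tool` and its type table are hypothetical and cover only a few field types; in the notebook itself, `pydantic_function_tool` does this work.

```python
from dataclasses import dataclass, fields
from datetime import date
from typing import Any

# Hypothetical mapping from Python field types to JSON Schema fragments (small subset only).
_JSON_TYPES: dict[type, dict[str, Any]] = {
    str: {"type": "string"},
    int: {"type": "integer"},
    float: {"type": "number"},
    bool: {"type": "boolean"},
    date: {"type": "string", "format": "date"},
}


def dataclass_to_strict_tool(cls: type) -> dict[str, Any]:
    """Build a strict function-tool definition shaped like the printed output above."""
    properties: dict[str, Any] = {}
    for f in fields(cls):
        schema = dict(_JSON_TYPES[f.type])  # raises KeyError for unsupported field types
        schema["title"] = f.name.replace("_", " ").title()  # "date_of_birth" -> "Date Of Birth"
        properties[f.name] = schema
    return {
        "type": "function",
        "function": {
            "name": cls.__name__,
            "strict": True,
            "parameters": {
                "properties": properties,
                # Strict mode: every property is required and no extra keys are allowed.
                "required": list(properties),
                "title": cls.__name__,
                "type": "object",
                "additionalProperties": False,
            },
        },
    }


@dataclass
class PassportBasic:
    passport_type: str
    name: str
    number: str
```

Because strict mode requires every property, a field that may be missing on a real document still has to be filled with something; that is one reason the fallback `Other` tool exists alongside the specific extractors.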

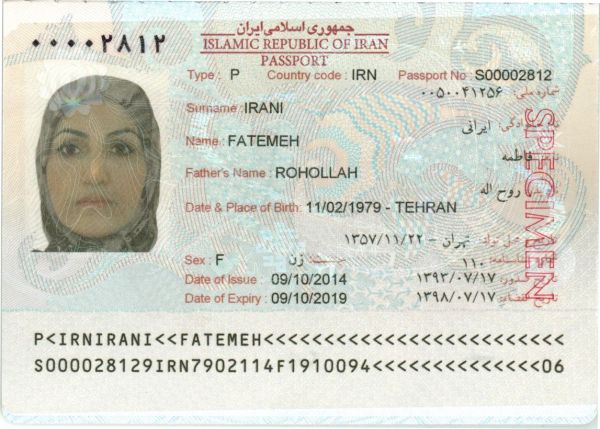

```python
response = extract_and_show_document("samples/passport-iran.jpg", tools)
print(response)

extracted_data = response.choices[0].message.tool_calls[0].function.parsed_arguments
print(f"Classification: {extracted_data.__class__.__name__}")
print(f"Extraction: {extracted_data.model_dump_json()}")
```

ParsedChatCompletion[NoneType]( id='chatcmpl-BpJGPSOSGGjOQhJqpI6tdMAZ3oH6k', choices=[ ParsedChoice[NoneType]( finish_reason='tool_calls', index=0, logprobs=None, message=ParsedChatCompletionMessage[NoneType]( content=None, refusal=None, role='assistant', annotations=[], audio=None, function_call=None, tool_calls=[ ParsedFunctionToolCall( id='call_FctRvhkqXGlqMok0fVl5vhIS', function=ParsedFunction( arguments='{"number":"S00002812","name":"FATEMEH IRANI","issuing_authority":"ISLAMIC REPUBLIC OF IRAN","passport_type":"P","place_of_birth":"TEHRAN","date_of_expiration":"09/10/2019","sex":"F","date_of_issue":"09/10/2014","country_code":"IRN","date_of_birth":"11/02/1979","nationality":"IRAN"}', name='PassportFull', parsed_arguments=PassportFull( passport_type='P', name='FATEMEH IRANI', number='S00002812', country_code='IRN', nationality='IRAN', date_of_birth='11/02/1979', sex='F', place_of_birth='TEHRAN', date_of_issue='09/10/2014', date_of_expiration='09/10/2019', issuing_authority='ISLAMIC REPUBLIC OF IRAN' ) ), type='function' ) ], parsed=None ) ) ], created=1751568477, model='gpt-4.1-mini-2025-04-14', object='chat.completion', service_tier='default', system_fingerprint='fp_6f2eabb9a5', usage=CompletionUsage( completion_tokens=104, prompt_tokens=765, total_tokens=869, completion_tokens_details=CompletionTokensDetails( accepted_prediction_tokens=0, audio_tokens=0, reasoning_tokens=0, rejected_prediction_tokens=0 ), prompt_tokens_details=PromptTokensDetails(audio_tokens=0, cached_tokens=0) ) )

Classification: PassportFull

Extraction: {"passport_type":"P","name":"FATEMEH IRANI","number":"S00002812","country_code":"IRN","nationality":"IRAN","date_of_birth":"11/02/1979","sex":"F","place_of_birth":"TEHRAN","date_of_issue":"09/10/2014","date_of_expiration":"09/10/2019","issuing_authority":"ISLAMIC REPUBLIC OF IRAN"}
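The extraction cells index `tool_calls[0]` directly, which assumes the model always calls a tool. If it answers in plain text instead, `tool_calls` is `None` and the lookup raises. Below is a defensive sketch over the raw Chat Completions JSON shape, where `function.arguments` is a JSON-encoded string (as in the `arguments=` field printed above). The helper name `first_tool_call` is hypothetical; with the SDK objects, `response.model_dump()` should produce a dict of this shape. The OpenAI API also accepts `tool_choice="required"` to force a tool call, though whether a given Databricks serving endpoint forwards it is an assumption to verify.

```python
import json
from typing import Any, Optional


def first_tool_call(completion: dict[str, Any]) -> Optional[tuple[str, dict[str, Any]]]:
    """Return (tool_name, arguments) for the first tool call, or None if the model made none."""
    choices = completion.get("choices") or []
    if not choices:
        return None
    message = choices[0].get("message") or {}
    tool_calls = message.get("tool_calls") or []  # null when the model replied with text
    if not tool_calls:
        return None
    function = tool_calls[0]["function"]
    # In the raw payload, `arguments` is a JSON string that still needs decoding.
    return function["name"], json.loads(function["arguments"])
```

Returning `None` rather than raising lets the caller decide whether to retry, fall back to manual review, or log the text reply.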


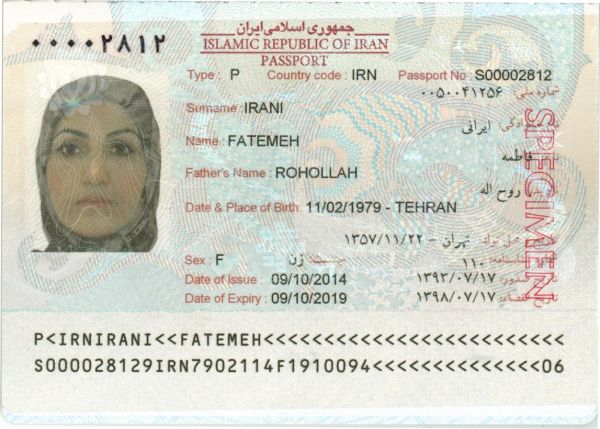

```python
response_i797 = extract_and_show_document("samples/i-797c-sample.jpg", tools)

extracted_data = response_i797.choices[0].message.tool_calls[0].function.parsed_arguments
print(f"Classification: {extracted_data.__class__.__name__}")
print(f"Extraction: {extracted_data.model_dump_json()}")
```

Classification: i797

Extraction: {"receipt_number":"WAC-1","case_type":"I130 PETITION FOR ALIEN RELATIVE","receipt_date":"2010-11-01","priority_date":"2010-10-28","notice_date":"2015-01-29","page":"1 of 1","petitioner":"John Doe","beneficiary":"Jane Doe","notice_type":"Approval Notice","section":"Brother or sister of US Citizen, 203(a)(4) INA"}
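The i797 schema declares `format: date` for its date fields, and those come back as ISO strings (`2010-11-01`). The passport models type their dates as plain strings, so they come back as printed on the document (`11/02/1979`), which is ambiguous between day-first and month-first. One option is to declare such fields as `date`, as the `Diploma` model below does; another is to normalize after extraction. A minimal sketch of the latter, where the hypothetical helper `to_iso_date` takes the source format as an explicit assumption rather than guessing it:

```python
from datetime import datetime


def to_iso_date(value: str, fmt: str = "%d/%m/%Y") -> str:
    """Normalize a date string to ISO 8601 (YYYY-MM-DD).

    The default format assumes day-first dates; that is an assumption about
    the source document, not something the model output guarantees.
    """
    # strptime raises ValueError if the string does not match `fmt`.
    return datetime.strptime(value.strip(), fmt).date().isoformat()
```

Raising on a mismatch, rather than silently guessing another order, keeps an ambiguous date from being stored with day and month swapped.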

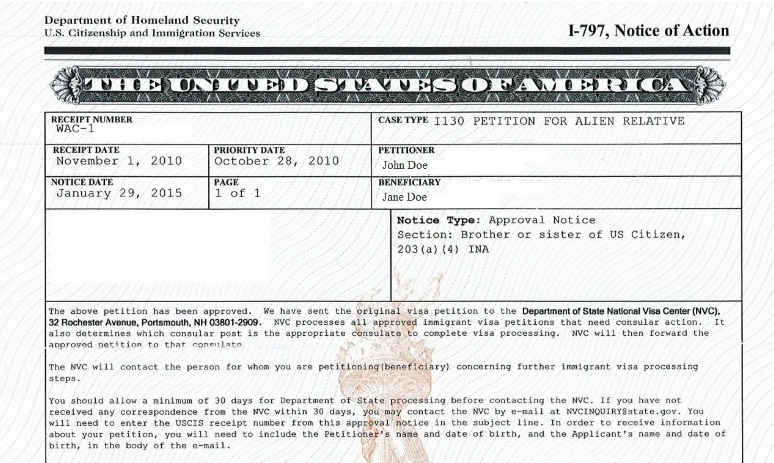

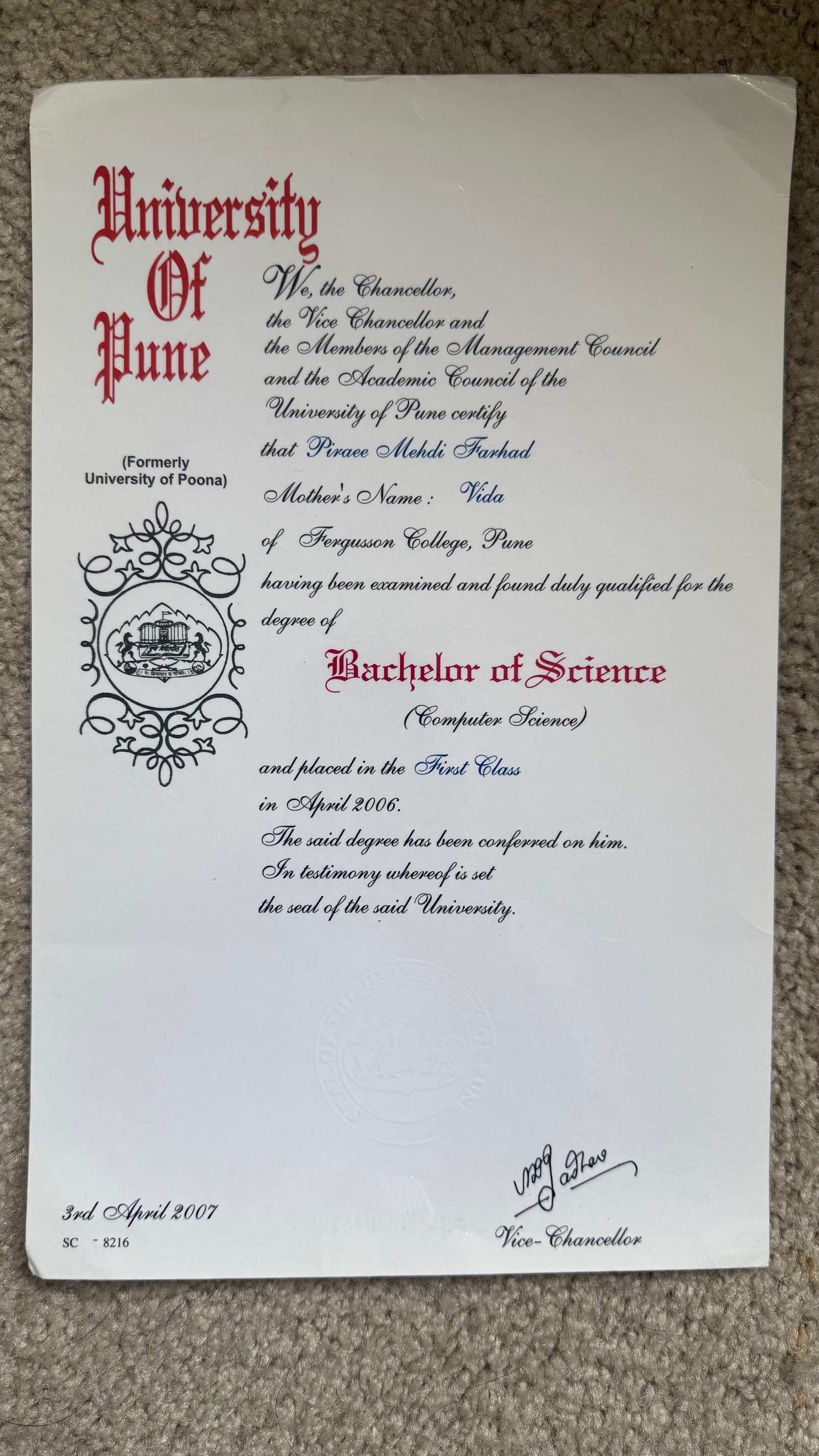

```python
response_diploma = extract_and_show_document("samples/diploma-bachelors-sample.jpg", tools)

extracted_data = response_diploma.choices[0].message.tool_calls[0].function.parsed_arguments
print(f"Classification: {extracted_data.__class__.__name__}")
print(f"Extraction: {extracted_data.model_dump_json()}")
```

Classification: Other

Extraction: {"description":"This is a university degree certificate from the University of Pune. It is not a passport or i797 notice."}
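The diploma matches none of the specific extractors, so the model picks `Other`: the fallback is working as intended. Because the chosen tool name doubles as the classification label, downstream code can dispatch on it. A minimal sketch, where the handler functions and the `HANDLERS` table are hypothetical placeholders and any unknown name is treated like `Other`:

```python
from typing import Any, Callable


# Hypothetical handlers, one per extractor tool name.
def handle_passport(args: dict[str, Any]) -> str:
    return f"passport {args.get('number', '?')}"


def handle_i797(args: dict[str, Any]) -> str:
    return f"I-797 receipt {args.get('receipt_number', '?')}"


def handle_other(args: dict[str, Any]) -> str:
    # Fallback: queue the document for manual review with the model's description.
    return f"manual review: {args.get('description', '')}"


HANDLERS: dict[str, Callable[[dict[str, Any]], str]] = {
    "PassportBasic": handle_passport,
    "PassportFull": handle_passport,
    "i797": handle_i797,
    "Other": handle_other,
}


def route(tool_name: str, args: dict[str, Any]) -> str:
    """Dispatch on the tool the model chose; unknown names fall back to the Other handler."""
    return HANDLERS.get(tool_name, handle_other)(args)
```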

```python
from datetime import date

from pydantic import BaseModel


class Diploma(BaseModel):
    name: str
    degree: str
    field: str
    institution: str
    date_issued: date


# This passes the bare `Diploma` class as `tools`, which raised the TypeError below:
# the SDK expects a list of function-tool definitions, such as the output of get_tools(...).
response = extract_and_show_document("samples/diploma-bachelors-sample.jpg", Diploma)
```

--------------------------------------------------------------------------- TypeError Traceback (most recent call last) File <command-4812577713911404>, line 11 8 institution: str 9 date_issued: date ---> 11 response = extract_and_show_document("samples/diploma-bachelors-sample.jpg", Diploma) File <command-4812577713911398>, line 48, in extract_and_show_document(document, tools) 26 base64_document = encode_image_to_base64(document) 27 completion_args = { 28 "messages": [ 29 { (...) 45 "tools": tools 46 } ---> 48 chat_completion = client.beta.chat.completions.parse(**completion_args) 50 display(Image(document)) 51 # print(response.parsed.model_dump_json(indent=4)) File /local_disk0/.ephemeral_nfs/envs/pythonEnv-8178295e-052c-4ae3-9548-4d8e1c58d0af/lib/python3.10/site-packages/openai/resources/chat/completions/completions.py:166, in Completions.parse(self, messages, model, audio, response_format, frequency_penalty, function_call, functions, logit_bias, logprobs, max_completion_tokens, max_tokens, metadata, modalities, n, parallel_tool_calls, prediction, presence_penalty, reasoning_effort, seed, service_tier, stop, store, stream_options, temperature, tool_choice, tools, top_logprobs, top_p, user, web_search_options, extra_headers, extra_query, extra_body, timeout) 84 def parse( 85 self, 86 *, (...) 122 timeout: float | httpx.Timeout | None | NotGiven = NOT_GIVEN, 123 ) -> ParsedChatCompletion[ResponseFormatT]: 124 """Wrapper over the `client.chat.completions.create()` method that provides richer integrations with Python specific types 125 & returns a `ParsedChatCompletion` object, which is a subclass of the standard `ChatCompletion` class. 126 (...) 
164 ``` 165 """ --> 166 _validate_input_tools(tools) 168 extra_headers = { 169 "X-Stainless-Helper-Method": "chat.completions.parse", 170 **(extra_headers or {}), 171 } 173 def parser(raw_completion: ChatCompletion) -> ParsedChatCompletion[ResponseFormatT]: File /local_disk0/.ephemeral_nfs/envs/pythonEnv-8178295e-052c-4ae3-9548-4d8e1c58d0af/lib/python3.10/site-packages/openai/lib/_parsing/_completions.py:45, in validate_input_tools(tools) 42 if not is_given(tools): 43 return ---> 45 for tool in tools: 46 if tool["type"] != "function": 47 raise ValueError( 48 f"Currently only `function` tool types support auto-parsing; Received `{tool['type']}`", 49 ) TypeError: 'ModelMetaclass' object is not iterable
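The traceback shows the cause: the SDK's input validation runs `for tool in tools:` and checks `tool["type"]`, but the cell passed the `Diploma` class itself, and a class is not iterable. The model has to be converted like the others, for example `get_tools([PassportBasic, PassportFull, i797, Diploma, Other])`. A small guard can turn this into a clearer error before any request is made; the helper `as_tool_list` below is a hypothetical sketch, not part of the OpenAI SDK.

```python
from typing import Any, Iterable, Union

ToolDict = dict[str, Any]


def as_tool_list(tools: Union[ToolDict, Iterable[ToolDict]]) -> list[ToolDict]:
    """Normalize `tools` to a list of function-tool dicts, failing early with a clear message."""
    if isinstance(tools, dict):
        tools = [tools]  # a single tool definition
    elif isinstance(tools, type):
        # Pydantic model classes are classes, so they land here instead of being iterated.
        raise TypeError(
            f"Got the class {tools.__name__}; convert it first, e.g. get_tools([{tools.__name__}])"
        )
    result = list(tools)
    for tool in result:
        if not isinstance(tool, dict) or tool.get("type") != "function":
            raise ValueError(f"Expected a function-tool dict, got {tool!r}")
    return result
```

In the notebook, the corrected call would pass a tool list that includes `Diploma`, e.g. `extract_and_show_document("samples/diploma-bachelors-sample.jpg", get_tools([PassportBasic, PassportFull, i797, Diploma, Other]))`; that call was not run here, so no output is shown for it.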

```python
response = extract_and_show_document("samples/Coursera-certificate.pdf", tools)

extracted_data = response.choices[0].message.tool_calls[0].function.parsed_arguments
print(f"Classification: {extracted_data.__class__.__name__}")
print(f"Extraction: {extracted_data.model_dump_json()}")
```

--------------------------------------------------------------------------- BadRequestError Traceback (most recent call last) File <command-4812577713911438>, line 1 ----> 1 response = extract_and_show_document("samples/Coursera-certificate.pdf", tools) 2 extracted_data = response.choices[0].message.tool_calls[0].function.parsed_arguments 4 print(f"Classification: {extracted_data.__class__.__name__}") File <command-4812577713911398>, line 48, in extract_and_show_document(document, tools) 26 base64_document = encode_image_to_base64(document) 27 completion_args = { 28 "messages": [ 29 { (...) 45 "tools": tools 46 } ---> 48 chat_completion = client.beta.chat.completions.parse(**completion_args) 50 display(Image(document)) 51 # print(response.parsed.model_dump_json(indent=4)) File /local_disk0/.ephemeral_nfs/envs/pythonEnv-8178295e-052c-4ae3-9548-4d8e1c58d0af/lib/python3.10/site-packages/openai/resources/chat/completions/completions.py:180, in Completions.parse(self, messages, model, audio, response_format, frequency_penalty, function_call, functions, logit_bias, logprobs, max_completion_tokens, max_tokens, metadata, modalities, n, parallel_tool_calls, prediction, presence_penalty, reasoning_effort, seed, service_tier, stop, store, stream_options, temperature, tool_choice, tools, top_logprobs, top_p, user, web_search_options, extra_headers, extra_query, extra_body, timeout) 173 def parser(raw_completion: ChatCompletion) -> ParsedChatCompletion[ResponseFormatT]: 174 return _parse_chat_completion( 175 response_format=response_format, 176 chat_completion=raw_completion, 177 input_tools=tools, 178 ) --> 180 return self._post( 181 "/chat/completions", 182 body=maybe_transform( 183 { 184 "messages": messages, 185 "model": model, 186 "audio": audio, 187 "frequency_penalty": frequency_penalty, 188 "function_call": function_call, 189 "functions": functions, 190 "logit_bias": logit_bias, 191 "logprobs": logprobs, 192 
"max_completion_tokens": max_completion_tokens, 193 "max_tokens": max_tokens, 194 "metadata": metadata, 195 "modalities": modalities, 196 "n": n, 197 "parallel_tool_calls": parallel_tool_calls, 198 "prediction": prediction, 199 "presence_penalty": presence_penalty, 200 "reasoning_effort": reasoning_effort, 201 "response_format": _type_to_response_format(response_format), 202 "seed": seed, 203 "service_tier": service_tier, 204 "stop": stop, 205 "store": store, 206 "stream": False, 207 "stream_options": stream_options, 208 "temperature": temperature, 209 "tool_choice": tool_choice, 210 "tools": tools, 211 "top_logprobs": top_logprobs, 212 "top_p": top_p, 213 "user": user, 214 "web_search_options": web_search_options, 215 }, 216 completion_create_params.CompletionCreateParams, 217 ), 218 options=make_request_options( 219 extra_headers=extra_headers, 220 extra_query=extra_query, 221 extra_body=extra_body, 222 timeout=timeout, 223 post_parser=parser, 224 ), 225 # we turn the `ChatCompletion` instance into a `ParsedChatCompletion` 226 # in the `parser` function above 227 cast_to=cast(Type[ParsedChatCompletion[ResponseFormatT]], ChatCompletion), 228 stream=False, 229 ) File /local_disk0/.ephemeral_nfs/envs/pythonEnv-8178295e-052c-4ae3-9548-4d8e1c58d0af/lib/python3.10/site-packages/openai/_base_client.py:1249, in SyncAPIClient.post(self, path, cast_to, body, options, files, stream, stream_cls) 1235 def post( 1236 self, 1237 path: str, (...) 
1244 stream_cls: type[_StreamT] | None = None, 1245 ) -> ResponseT | _StreamT: 1246 opts = FinalRequestOptions.construct( 1247 method="post", url=path, json_data=body, files=to_httpx_files(files), **options 1248 ) -> 1249 return cast(ResponseT, self.request(cast_to, opts, stream=stream, stream_cls=stream_cls)) File /local_disk0/.ephemeral_nfs/envs/pythonEnv-8178295e-052c-4ae3-9548-4d8e1c58d0af/lib/python3.10/site-packages/openai/_base_client.py:1037, in SyncAPIClient.request(self, cast_to, options, stream, stream_cls) 1034 err.response.read() 1036 log.debug("Re-raising status error") -> 1037 raise self._make_status_error_from_response(err.response) from None 1039 break 1041 assert response is not None, "could not resolve response (should never happen)" BadRequestError: Error code: 400 - {'error_code': 'BAD_REQUEST', 'message': '{"external_model_provider":"openai","external_model_error":{"error":{"message":"You uploaded an unsupported image. Please make sure your image has of one the following formats: [\'png\', \'jpeg\', \'gif\', \'webp\'].","type":"invalid_request_error","param":null,"code":"invalid_image_format"}}}'}
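The request fails because `extract_and_show_document` labels every file `data:image/jpeg` and sends the raw bytes, so a PDF reaches the provider as an unreadable image; the error lists png, jpeg, gif and webp as the accepted formats. A minimal sketch of a stricter encoder that derives the MIME type from the file extension with the standard `mimetypes` module and rejects anything else before the API call. The function name `image_data_url` is hypothetical, and rasterizing PDF pages to PNG or JPEG (with a PDF rendering library) is assumed to happen separately and is not shown.

```python
import base64
import mimetypes

# The formats named in the provider's error message.
SUPPORTED_IMAGE_TYPES = {"image/png", "image/jpeg", "image/gif", "image/webp"}


def image_data_url(path: str) -> str:
    """Encode an image file as a data URL whose MIME type matches its extension."""
    mime, _ = mimetypes.guess_type(path)
    if mime not in SUPPORTED_IMAGE_TYPES:
        # Fail locally instead of sending an unsupported file to the endpoint.
        raise ValueError(
            f"{path}: {mime or 'unknown type'} is not a supported image; "
            "convert PDFs to PNG or JPEG before sending them"
        )
    with open(path, "rb") as f:
        encoded = base64.b64encode(f.read()).decode("ascii")
    return f"data:{mime};base64,{encoded}"
```

Using the guessed MIME type also stops PNG files from being mislabeled as JPEG, which the current helper does for every input.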

```python
response = extract_and_show_document("samples/berkeley-certificate.pdf", tools)

extracted_data = response.choices[0].message.tool_calls[0].function.parsed_arguments
print(f"Classification: {extracted_data.__class__.__name__}")
print(f"Extraction: {extracted_data.model_dump_json()}")
```

--------------------------------------------------------------------------- BadRequestError Traceback (most recent call last) File <command-4812577713911439>, line 1 ----> 1 response = extract_and_show_document("samples/berkeley-certificate.pdf", tools) 2 extracted_data = response.choices[0].message.tool_calls[0].function.parsed_arguments 4 print(f"Classification: {extracted_data.__class__.__name__}") File <command-4812577713911398>, line 48, in extract_and_show_document(document, tools) 26 base64_document = encode_image_to_base64(document) 27 completion_args = { 28 "messages": [ 29 { (...) 45 "tools": tools 46 } ---> 48 chat_completion = client.beta.chat.completions.parse(**completion_args) 50 display(Image(document)) 51 # print(response.parsed.model_dump_json(indent=4)) File /local_disk0/.ephemeral_nfs/envs/pythonEnv-8178295e-052c-4ae3-9548-4d8e1c58d0af/lib/python3.10/site-packages/openai/resources/chat/completions/completions.py:180, in Completions.parse(self, messages, model, audio, response_format, frequency_penalty, function_call, functions, logit_bias, logprobs, max_completion_tokens, max_tokens, metadata, modalities, n, parallel_tool_calls, prediction, presence_penalty, reasoning_effort, seed, service_tier, stop, store, stream_options, temperature, tool_choice, tools, top_logprobs, top_p, user, web_search_options, extra_headers, extra_query, extra_body, timeout) 173 def parser(raw_completion: ChatCompletion) -> ParsedChatCompletion[ResponseFormatT]: 174 return _parse_chat_completion( 175 response_format=response_format, 176 chat_completion=raw_completion, 177 input_tools=tools, 178 ) --> 180 return self._post( 181 "/chat/completions", 182 body=maybe_transform( 183 { 184 "messages": messages, 185 "model": model, 186 "audio": audio, 187 "frequency_penalty": frequency_penalty, 188 "function_call": function_call, 189 "functions": functions, 190 "logit_bias": logit_bias, 191 "logprobs": logprobs, 192 
"max_completion_tokens": max_completion_tokens, 193 "max_tokens": max_tokens, 194 "metadata": metadata, 195 "modalities": modalities, 196 "n": n, 197 "parallel_tool_calls": parallel_tool_calls, 198 "prediction": prediction, 199 "presence_penalty": presence_penalty, 200 "reasoning_effort": reasoning_effort, 201 "response_format": _type_to_response_format(response_format), 202 "seed": seed, 203 "service_tier": service_tier, 204 "stop": stop, 205 "store": store, 206 "stream": False, 207 "stream_options": stream_options, 208 "temperature": temperature, 209 "tool_choice": tool_choice, 210 "tools": tools, 211 "top_logprobs": top_logprobs, 212 "top_p": top_p, 213 "user": user, 214 "web_search_options": web_search_options, 215 }, 216 completion_create_params.CompletionCreateParams, 217 ), 218 options=make_request_options( 219 extra_headers=extra_headers, 220 extra_query=extra_query, 221 extra_body=extra_body, 222 timeout=timeout, 223 post_parser=parser, 224 ), 225 # we turn the `ChatCompletion` instance into a `ParsedChatCompletion` 226 # in the `parser` function above 227 cast_to=cast(Type[ParsedChatCompletion[ResponseFormatT]], ChatCompletion), 228 stream=False, 229 ) File /local_disk0/.ephemeral_nfs/envs/pythonEnv-8178295e-052c-4ae3-9548-4d8e1c58d0af/lib/python3.10/site-packages/openai/_base_client.py:1249, in SyncAPIClient.post(self, path, cast_to, body, options, files, stream, stream_cls) 1235 def post( 1236 self, 1237 path: str, (...) 
1244 stream_cls: type[_StreamT] | None = None, 1245 ) -> ResponseT | _StreamT: 1246 opts = FinalRequestOptions.construct( 1247 method="post", url=path, json_data=body, files=to_httpx_files(files), **options 1248 ) -> 1249 return cast(ResponseT, self.request(cast_to, opts, stream=stream, stream_cls=stream_cls)) File /local_disk0/.ephemeral_nfs/envs/pythonEnv-8178295e-052c-4ae3-9548-4d8e1c58d0af/lib/python3.10/site-packages/openai/_base_client.py:1037, in SyncAPIClient.request(self, cast_to, options, stream, stream_cls) 1034 err.response.read() 1036 log.debug("Re-raising status error") -> 1037 raise self._make_status_error_from_response(err.response) from None 1039 break 1041 assert response is not None, "could not resolve response (should never happen)" BadRequestError: Error code: 400 - {'error_code': 'BAD_REQUEST', 'message': '{"external_model_provider":"openai","external_model_error":{"error":{"message":"You uploaded an unsupported image. Please make sure your image has of one the following formats: [\'png\', \'jpeg\', \'gif\', \'webp\'].","type":"invalid_request_error","param":null,"code":"invalid_image_format"}}}'}
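Both certificates fail the same way, and each failure stops its cell. When running over a folder of mixed documents, it is usually more useful to record each failure and keep going. A minimal sketch, assuming a caller-supplied `extract` function (in the notebook, something like `lambda p: extract_and_show_document(p, tools)`); the name `extract_all` is hypothetical:

```python
from typing import Any, Callable


def extract_all(
    paths: list[str], extract: Callable[[str], Any]
) -> tuple[dict[str, Any], dict[str, str]]:
    """Run `extract` on each path, keeping results and errors separate so one bad file doesn't stop the batch."""
    results: dict[str, Any] = {}
    errors: dict[str, str] = {}
    for path in paths:
        try:
            results[path] = extract(path)
        except Exception as exc:  # e.g. a BadRequestError for an unsupported file format
            errors[path] = f"{type(exc).__name__}: {exc}"
    return results, errors
```

Catching broadly is deliberate here because the goal is a report over the whole batch; the `errors` dict keeps the exception type and message so failures such as the unsupported-format responses above can be reviewed afterwards.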